Figures from the History of Probability and Statistics

John Aldrich, University of Southampton, Southampton, UK. (home)

Notes on the work of

A further 200+ individuals are mentioned below. Use Search on your browser to find the person you are interested in. It is also worth searching for the ‘principals’ for they can pop up anywhere.

The entries are arranged chronologically, so the document can be read as a story. These are the date markers

with people placed according to date of their first impact. Do not take the placings too seriously and remember that a career may last more than 50 years! At each marker there are notes on developments in the following period. There is more about

For further on-line information there are links to

· Earliest Uses (Words and Symbols) for details (particularly detailed references) on the topics to which the individuals contributed. (The Words site is organised by letter of the alphabet. See here for a list of entries)

· MacTutor for fuller biographical information on the ‘principals’ (all but three) and on a very large ‘supporting’ cast. The MacTutor biographies also cover the work the individuals did outside probability and statistics. The MacTutor and References links are to these pages. There is an index to the Statistics articles on the site.

· ASA Statisticians in History for biographies of mainly recent, mainly US statisticians.

· Life and Work of Statisticians (part of the Materials for the History of Statistics site) for further links, particularly to original sources.

· Oscar Sheynin’s Theory of Probability: A Historical Essay An account of developments to the beginning of the twentieth century, particularly useful for its coverage of Continental work on statistics.

· Isaac Todhunter’s classic from 1865 A History of the Mathematical Theory of Probability : from the Time of Pascal to that of Laplace for detailed commentaries on the contributions from 1650-1800. The coverage is extraordinary and the entries are still interesting—even their humourlessness has a certain charm.

· The Mathematics Genealogy Project, abbreviated MGP, which is useful for tracking modern scholars. The PhD degree is a relatively recent development and in the

· Wikipedia for additional biographies. This is an uneven site but it has some useful articles.

The entries contain references to the following histories and books of lives. See below for more literature.

· Ian Hacking The Emergence of Probability,

· Stephen M Stigler The History of Statistics: The Measurement of Uncertainty before 1900,

· Anders Hald A History of Probability and Statistics and their applications before 1750,

· Anders Hald A History of Mathematical Statistics from 1750 to 1930,

· Jan von Plato Creating Modern Probability,

· Leading Personalities in Statistical Sciences from the Seventeenth Century to the Present, (ed. N. L. Johnson and S. Kotz) 1997.

· Statisticians of the Centuries (ed. C. C. Heyde and E. Seneta) 2001.

· Encyclopedia of Social Measurement (ed. K. Kempf-Leonard) 2004.

On the web (see also online biblios and texts below)

· Stochastikon Encyclopedia. Articles in English and German.

· Portraits of Statisticians on the Materials for the History of Statistics site.

· Sources in the History of Probability and Statistics by Richard J. Pulskamp.

· Tales of Statisticians. Vignettes by E. Bruce Brooks.

· History of Statistics and Probability 18 short biographies from

· Glimpses of the Prehistory of Econometrics. Montage by Jan Kiviet.

· Probability and Statistics Ideas in the Classroom: Lesson from History. Comments on the uses of history by D.

· The History of Statistics in the Classroom. Thumbnail sketches of Gauss, Laplace and Fisher by H. A. David.

· Milestones in the History of Thematic Cartography, Statistical Graphics, and Data Visualization. Encyclopaedic coverage by M. Friendly & D.J. Denis.

· Actuarial History. A very comprehensive collection of links, created by Henk Wolthuis, not only to actuarial science and demography, but to statistics as well.

To help place individuals I have used modern terms for occupation (e.g. physicist or statistician). For the earlier figures these terms are anachronistic but, I hope, not too misleading. I have not given nationality as people move and states come and go. MacTutor has plenty of geographical information.

1650-1700 The origins of probability and statistics are usually found in this period, in the mathematical treatment of games of chance and in the systematic study of mortality data. This was the age of the Scientific Revolution and the biggest names, Galileo (Materials and Todhunter ch. I (4-6)) and Newton (LP), gave some thought to probability without apparently influencing its development. For an introduction to the Scientific Revolution, see Westfall’s Scientific Revolution (1986).

· There were earlier contributions to probability, e.g. Cardano (1501-76) gave some ‘probabilities’ associated with dice throwing, but a critical mass of researchers (and results) was only achieved following discussions between Pascal and Fermat and the publication of the first book by Huygens. Hacking Chapters 1-5 discuss thinking before Pascal. James Franklin’s The Science of Conjecture: Evidence and Probability Before Pascal (2001) examines this earlier work in depth. A recent issue of the JEHPS is devoted to Medieval probabilities.

· Statistics in the form of population statistics was created by Graunt. Graunt’s friend William Petty gave the name Political Arithmetic to the quantitative study of demography and economics. Gregory King was an important figure in the next generation. However, the economic line fizzled out. Adam Smith, the most influential C18 British economist, wrote, “I have no great faith in political arithmetic...” Wealth of Nations (1776) B.IV, Ch.5, Of Bounties.

· A form of life insurance mathematics was developed from Graunt’s work on the life table by the mathematicians Halley, Hudde and de Witt. Many later ‘probabilists’ wrote on actuarial matters, including de Moivre, Simpson, Price, De Morgan, Gram, Thiele, Cantelli, Cramér and de Finetti. In the C20 the Skandinavisk aktuarietidskrift and the Giornale dell'Istituto Italiano degli Attuari were important journals for theoretical statistics and probability. Actuarial questions and friendship with the actuary G. J. Lidstone stimulated the Edinburgh mathematicians, E. T. Whittaker and A. C. Aitken (MGP), to contribute to statistics and numerical analysis. The C17 work is discussed by Hacking (1975): Chapter 13, Annuities. See also Chris Lewin’s The Creation of Actuarial Science and the other historical links on the International Actuarial Links page. There are historical articles in the Encyclopedia of Actuarial Science. Classics are reprinted in History of Actuarial Science. There is a nice review of the early literature in the catalogue of the Equitable Life Archive.

· New institutions, rather than the traditional universities, underpinned these developments. In

Life & Work has links to the writings of many of these people. For the period generally see Todhunter ch. I-VI (pp. 1-55) and Hald (1990, ch. 1-12).

|

|

Blaise Pascal (1623-1662) Mathematician and philosopher. MacTutor References SC, LP. Pascal was educated at home by his father, himself a considerable mathematician. The origins of probability are usually found in the correspondence between Pascal and Fermat where they treated several problems associated with games of chance. The letters were not published but their contents were known in Parisian scientific circles. Pascal’s only probability publication was the posthumously published Traité du triangle arithmétique (1654, published in 1665 and so after Huygens’s work); this treated Pascal’s triangle with probability applications. Pascal introduced the concept of expectation and discussed the problem of gambler’s ruin. Pascal’s wager is now often read as a pioneering analysis of decision-making under uncertainty although it appeared, not in his mathematical writings, but in the Pensées, his reflections on religion. The last chapter of the Port-Royal Logic pp. 365ff by Pascal’s friends Arnauld and Nicole has a brief treatment of the use of probability in decision making, with an allusion to the wager. See Ben Rogers Pascal's Life & Times, Life & Work, A.W.F. Edwards on the triangle and Todhunter ch.II (pp. 7-21). See also Hald 1990, chapter 5, The Foundations of Probability Theory by Pascal and Fermat in 1654 and Hacking 1975, chapter 7, The |

|

Christiaan Huygens (1629-94) Mathematician and physicist. MacTutor References SC, LP. As a youth Huygens was expected to become a diplomat but instead he became a gentleman scientist, making important contributions to mathematics, physics and astronomy. He was educated at the |

|

No authentic portrait of Graunt is known |

John Graunt (1620-74) Merchant. Wikipedia. SC, LP, ESM. Graunt is unique among the figures described here in not having had a university education. He published only one work, Observations Made upon the Bills of Mortality (1662). However, through this work and his friendship with William Petty, he became a fellow of the Royal Society of London and his work became known to savants like Halley. The weekly bills of mortality, which had been collected since 1603, were designed to detect the outbreak of plague. Graunt put the data into tables and produced a commentary on them. He does basic calculations, discusses the reliability of the data and compares the numbers of male and female births and deaths. In the course of Chapter XI on estimating the population of London Graunt produces a primitive life table; see the JIA articles by Glass, Renn, Benjamin and Seal. The life table became one of the main tools of demography and insurance mathematics. Halley produced a life table using data from Caspar Neumann (SC) in |

1700-50 The great leap forward is Hald’s (1990) name for the decade 1708-1718: there were so many important contributions to such a greatly expanded subject. The roots of probability and statistics were quite distinct but by the early C18 it was understood that the subjects were closely related.

· Jakob Bernoulli’s Ars Conjectandi, like Arnauld’s Logique (1682) pp. 365ff, suggested a conception of probability broader than that associated with games of chance. Bernoulli’s law of large numbers provided a theory linking probability and data. See Hacking (1975): Chapters 16-7.

· Montmort’s (SC, LP) Essay d'analyse sur les jeux de hazard (1708) and de Moivre’s Doctrine of Chances (1718) produced many new results on games of chance, greatly extending the work of Pascal and Huygens.

· Arbuthnot’s (SC, LP) 1710 paper An Argument for Divine Providence, taken from the constant Regularity observed in the Births of both Sexes used a significance test (sign test) to establish that the probability of a male birth is not ½. The calculations were refined by 'sGravesande (LP) and Nicholas Bernoulli (LP). Apart from being an early application of probability to social statistics, Arbuthnot’s paper illustrates the close connection between theology and probability in the literature of the time. The work of John Craig provides another example.

· Consideration of the valuation of a risky prospect, dramatised by the St. Petersburg paradox (formulated by Nicholas Bernoulli in 1713 and discussed by Gabriel Cramer) led to Daniel Bernoulli’s (1737) theory of moral expectation (or expected utility).

See Life & Work for writings by Montmort, Euler, Lagrange, etc. For the period see Todhunter ch. VII-X (pp. 56-212), Hald (1990, ch. 1-12) and Hacking Chapters 16-9.

|

|

Jakob (James) Bernoulli (1654-1705) Mathematician. MacTutor References SC, LP, ESM. Eight members of the Bernoulli family have biographies in MacTutor (family tree) and several wrote on probability. The most important contributors were Jakob, Daniel and Niklaus. Jakob and his younger brother Johann were the first of the mathematicians. Jakob studied philosophy at the |

|

|

Abraham de Moivre (1667-1754) Mathematician MacTutor References SC, LP. De Moivre came to |

|

|

Daniel Bernoulli (1700-1782) Mathematician and physicist MacTutor References SC, LP. Daniel Bernoulli, a nephew of Jakob Bernoulli, was educated at the

|

1750-1800 Probability established itself in physical science, in astronomy its most developed branch. The most enduring of these applications to astronomy treated the combination of observations. The resulting theory of errors was the most important ancestor of modern statistical inference, particularly of estimation theory.

· The major mathematician/astronomers, including Daniel Bernoulli, Boscovich, Euler, Lambert, Mayer and Lagrange, treated the problem of combining astronomical observations, “in order to diminish the errors arising from the imperfections of instruments and the organs of sense” in the words of Thomas Simpson. Simpson introduced the idea of postulating an error distribution. See Hald (1998, Part I Direct Probability, 1750-1805) and Richard J. Pulskamp’s Sources in the History of Probability and Statistics.

· More tests of significance were developed, mainly for use in astronomy; see Daniel Bernoulli and also John Michell (1767) Crossley, who calculated the odds that the Pleiades is a system of stars and not a random agglomeration. See Hald (1998): Part I Direct Probability, 1750-1805.

· Interval statements about the parameter of the Binomial distribution, ancestors of the modern confidence interval, were produced by Lagrange and Laplace in the 1780s.

· In the 1770s Condorcet started publishing on social mathematics, largely the application of probability to the decisions of juries and other assemblies. His work had a strong influence on Laplace and Poisson. Other French authors from this period included D’Alembert and Buffon; the former is best remembered for his critical remarks on probability and the latter for his needle experiment.

· An important development in probability theory was work on conditional probability with applications to inverse probability or Bayesian inference by Bayes and Laplace. See Hald (1998): Part II Inverse Probability.

See Todhunter ch. XI-XIX and Stigler (1986): Part I, The Development of Mathematical Statistics in Astronomy and Geodesy before 1827. For this period and the next see also Lorraine Daston (1988) Classical Probability in the Enlightenment.

|

No authentic portrait of Bayes is known (for an unlikely possibility see here) |

Thomas Bayes (1702-1761) Clergyman and mathematician. MacTutor References SC, LP, ESM. Bayes attended the |

|

|

Pierre-Simon Laplace (1749-1827) Mathematician and physicist MacTutor References SC, LP, ESM. |

1800-1830 The contrasting figures of Laplace and Gauss dominate this period.

· Work on the theory of errors reached a climax with the introduction of the method of least squares. The method was published by Legendre in 1805 and within twenty years there were three probability-based rationalisations: Gauss’s Bayesian argument (see uniform prior), Laplace’s argument based on the central limit theorem and Gauss’s Gauss-Markov theorem. Work continued through the C19 with numerous mathematicians and astronomers contributing, including Cauchy (Cauchy distribution), Poisson, Fourier, Bienaymé, Bessel (probable error), Encke, Peters (Peters' method), Lüroth, Robert Ellis, Airy, Glaisher, Chauvenet and Newcomb. (The Cauchy distribution first appeared as an awkward case for the theory of errors.) Pearson, Fisher and Jeffreys were taught the theory of errors by astronomers. In the C20 astronomers, including Eddington, Kapteyn and Charlier, also investigated the statistical properties of constellations, picking up from the middle of the C18 (above).

· Gauss found a second important application of least squares in geodesy. Geodesists made important contributions to least squares, particularly on the computational side, not surprisingly as the calculations could be on an industrial scale. The eponyms, Gauss-Jordan and Cholesky MacTutor, honour later geodesists. Helmert (Helmert's transformation) and Paolo Pizzetti were geodesists who contributed to the theory of errors. At least one important C20 statistician started as a surveyor, Frank Yates, Fisher’s colleague and successor at Rothamsted.

·

In

· Around this time William Playfair was finding new ways of representing data graphically but nobody was paying attention. Techniques slowly accumulated over the next 150 years without the idea of graphical statistics as a study in its own right gaining ground. That idea is quite recent and mainly associated with Tukey. See Milestones.

· The age of the academies was over and from now on the main advances took place in universities. The French education system was transformed in the course of the Revolution and the C19 saw the rise of the German university.

See Stigler (1986): Part I, The Development of Mathematical Statistics in Astronomy and Geodesy before 1827 and Hald (1998): Part III The Normal Distribution, the Method of Least Squares and the Central Limit Theorem. See Life & Work. See also L. Daston (1988) Classical Probability in the Enlightenment.

|

|

Carl Friedrich Gauss (1777-1855) Mathematician, physicist and geodesist. MacTutor References SC, LP. Gauss is generally regarded as one of the greatest mathematicians of all time and his contributions to the theory of errors were only a small part of his total output. Gauss spent most of his working life at the |

1830-1860 This period saw the emergence of the statistical society, which has been on the stage ever since, although the meaning of “statistics” has changed, and the beginning of a philosophical literature on probability. It also saw the beginning of the most glamorous branch of empirical time series analysis, the study of the sunspot cycle.

· Since the 1830s there have been statistical societies, including the London (Royal) Statistical Society Wikipedia and the American Statistical Association (now the world’s largest). The Statistical Society of Paris was founded in 1860. The International Statistical Institute Wikipedia was founded in 1885 although there had been international congresses from 1853. Statistics were facts about human populations and in

· Since 1840, or so, there has been a philosophical literature on probability. The English literature begins with the extensive discussion of probability in John Stuart Mill’s System of Logic (1843). This was followed by The Logic of Chance (1862) of John Venn, the Principles of Science (1873) of W. Stanley Jevons and the Grammar of Science (1892) of Karl Pearson. The American scientist/philosopher C.S. Peirce (Stanford) wrote extensively on probability, although he was not much read. There was an overlapping literature on logic and probability. De Morgan can be placed here as well as Boole (LP) whose An Investigation into the Laws of Thought, on which are founded the Mathematical Theories of Logic and Probabilities (1854) contained a long discussion of probability. Later figures are mentioned below. There were German and French literatures as well but philosophical probability was less international than mathematical probability. See Porter’s Rise of Statistical Thinking.

· In 1843 Schwabe observed that sunspot activity is periodic. There followed decades of research, not only in solar physics but in terrestrial magnetism, meteorology and even economics, examining series to see if their periodicity matched that of the sunspots. Even before the sunspot craze there was intense interest in periodicity in meteorology, tidology and other branches of observational physics and, by the end of the century, seismology was becoming important. Both Laplace and Quetelet had analysed meteorological data and Herschel had written a book on the subject. The techniques in use varied from the simple, such as the Buys Ballot table, to the sophisticated, such as forms of harmonic analysis. At the end of the century the physicist Arthur Schuster introduced the periodogram. However, by then a rival form of time series analysis, based on correlation and promoted by Pearson, Yule, Hooker and others, was taking shape. For an account see J. L. Klein (1997) Statistical Visions in Time,

|

Lambert Adolphe Jacques Quetelet (1796-1874) Astronomer and statistician. MacTutor References SC, LP, ESM. Adolphe Quetelet studied at the |

1860-1880 Two important applied fields opened up in this period. Probability found a major new application in physical science, to the theory of gases, which developed into statistical mechanics. Problems in statistical mechanics were behind many of the probability advances of the early C20. The statistical study of heredity developed into biometry and many of the advances in statistical theory were associated with this subject. There were important geographical changes, as important work in probability started to come from

· In 1860 James Clerk Maxwell used the error curve (normal distribution) in the theory of gases; he seems to have been influenced by Quetelet via John Herschel’s review of the Letters on Probability. Boltzmann and Gibbs developed the theory of gases into statistical mechanics.

· Galton inaugurated the statistical study of heredity, work continued well into the C20 by Pearson and Fisher. Correlation was the most distinctive contribution of this “English” school. See Stigler (1986): Part III, A Breakthrough in Studies of Heredity.

· By contrast, the so-called “continental direction” investigated the appropriateness of simple urn models for treating birth and death rates by considering the stability of the series of rates over time. See Wilhelm Lexis, Theorie der Massenerscheinungen in der menschlichen Gesellschaft (1877). Bortkiewicz, Markov, Chuprov and Anderson all worked in this tradition. See Stigler (1986): Chapter 6, Attempts to revive the Binomial, C. C. Heyde & E. Seneta I. J. Bienaymé: Statistical Theory Anticipated, 1977 and Sheynin ch. 15.1.

· ‘Higher’ statistics entered psychology and economics. For psychology see Fechner. In economics W. Stanley Jevons (Wikipedia MacTutor New School) (SC) saw himself as continuing the work of the political arithmeticians of 1650+. In the intervening two centuries much had been done and Jevons’s work on index numbers was inspired by the theory of errors, while his research on economic time series was inspired by the work of meteorologists on seasonal variation and of physicists on the solar cycle and its terrestrial correlates (see above). Jevons also tried to link his mathematical economic theory (see utility) to statistical analysis, a project revived in the econometrics of the C20.

See Stigler (1986) and T. M. Porter The Rise of Statistical Thinking 1820-1900 (1986).

|

|

Ludwig Boltzmann (1844-1906) Physicist. MacTutor References. MGP. LP. Boltzmann, with Gibbs, was responsible for transforming Maxwell’s probabilistic theory of gases into statistical mechanics. Boltzmann was awarded a doctorate from the |

|

|

Gustav Theodor Fechner (1801-1877) Wikipedia Physicist and psychologist. SC. Fechner went to the |

|

|

Francis Galton (1822-1911) Man of science MacTutor References SC, LP, ESM. After studying mathematics at |

|

P. L. Chebyshev (1821-94) Mathematician. MacTutor References. SC, LP. Chebyshev was one of the most important of C19 mathematicians and probability formed only a small part of his output. He had predecessors in |

1880-1900 In this period the English statistical school took shape. Pearson was the dominant force until Fisher displaced him in the 1920s. The school dominated statistics until the Second World War. T. Schweder’s Early Statistics in the Nordic Countries considers why this did not happen in

· Galton introduced correlation and a theory was rapidly developed by Pearson, Edgeworth, Sheppard and Yule. Correlation was a major departure from the statistical work of Laplace and Gauss, both as a technique and because of the applications it made possible. It became widely used in biology, psychology and social science.

· In economics Edgeworth developed some of Jevons’s ideas, most notably on index numbers. However, economic statistics in

|

|

|

|

F. Y. Edgeworth (1845-1926) Economist and statistician. MacTutor References. SC, LP, ESM. Edgeworth studied classics at Trinity College Dublin and Balliol College Oxford. From around 1880 he followed dual careers in economics and in statistics. Edgeworth seems to have been self-taught in mathematics and he made a thorough study of the subject and remained very well read. He began in statistics by subjecting the casual statistical methods of Jevons to rigorous examination and started what turned out to be a long involvement with index numbers (see “Money” in his Papers relating to Political Economy, vol. 2). However, most of his extensive publications in statistical theory were not motivated by economic applications, or direct applications of any kind. In 1892 Edgeworth, prompted by Galton, examined correlation and methods of estimating correlation coefficients. Another concern, which led to a stream of papers, was with generalisations of the normal distribution, as in e.g. his 1905 paper “The law of error”. The Edgeworth expansions that came from this research are now associated with distributions of estimators and test statistics but Edgeworth originally envisaged them as distributions for data, as an alternative to the Pearson curves. Edgeworth’s starting point was Laplace. Much of his work was not followed up, like his 1908/9 papers “On the probable errors of frequency-constants” which anticipated some of Fisher’s large sample theory for maximum likelihood. Unlike his contemporaries, Pearson in statistics and Alfred Marshall in economics, Edgeworth founded no school. From 1891 he was professor of political economy at |

|

|

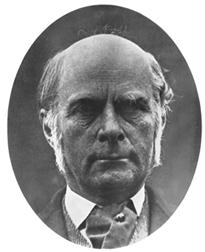

Karl Pearson (1857-1936) Biometrician, statistician & applied mathematician. MacTutor References. SC, LP, ESM. Karl Pearson read mathematics at |

1900-1920 In the years before the Great War of 1914-18 probability and statistics were expanding in all directions. During the war research in statistics and probability almost stopped as people went into the armed services or did other kinds of war work. Pearson, Lévy and Wiener worked in ballistics, Jeffreys in meteorology and Yule in administration. For the mathematicians’ traditional role in war, see The Geometry of War.

· In 1900 David Hilbert proposed a set of problems for the C20. The 6th was, “to treat … by means of axioms, those physical sciences in which mathematics plays an important part; in the first rank are the theory of probabilities and mechanics.” Measure theory, which would have a key role in the axiomatisation of probability, was being created by Borel, Lebesgue and others (see below).

· From different subjects came contributions that eventually found a place in the theory of stochastic processes. In physics Einstein and Smoluchowski (see Cohen’s History of Noise) worked on Brownian motion. Bachelier (see Bru & Taqqu) developed a similar model applied to financial speculation; that application was a sleeper until the 1970s. The actuary Lundberg developed a theory of collective risk. Malaria and the migration of mosquitoes were behind Pearson’s interest in the random walk problem. Mathematical models of epidemics were developed by Ronald Ross and A. G. McKendrick MacTutor without reference to the earlier work of Daniel Bernoulli.

· Mendel did not use probability in his work on genetics (published 1866) but his ideas were probabilised as Pearson, Yule and Fisher investigated how far his principles could rationalise the findings of the biometricians.

· Correlation began to be important in psychology, largely through Charles Spearman (1863-1945). Amongst his contributions to statistics were rank correlation and factor analysis. Godfrey Thomson was a severe critic of Spearman’s factor analysis of intelligence. In the 1930s Louis L. Thurstone developed a multiple factor analysis.

· In economics, especially in the

· Industrial applications of probability begin with Erlang’s work on congestion in telephone systems, the ancestor of modern queuing theory.

· Institutional developments include the creation in 1911 of the Department of Applied Statistics at UCL headed by Pearson. Also in

See Hald (1998, Part IV) and von Plato (ch. 3) “Probabilities in Statistical Physics.” For developments in economics see M. S. Morgan A History of Econometric Ideas,

|

G. Udny Yule (1871-1951) Statistician. MacTutor References. Wikipedia |

|

A. A. Markov (1856-1922) Mathematician. MacTutor References. SC, LP. Markov spent his working life at the University of St. Petersburg. Markov was, with Lyapunov, the most distinguished of Chebyshev’s students in probability. Markov contributed to established topics such as the central limit theorem and the law of large numbers. It was the extension of the latter to dependent variables that led him to introduce the Markov chain. He showed how Chebyshev’s inequality could be applied to the case of dependent random variables. In statistics he analysed the alternation of vowels and consonants as a two-state Markov chain and did work in dispersion theory. Markov had a low opinion of the contemporary work of Pearson, an opinion not shared by his younger compatriots Chuprov and Slutsky. Markov’s Theory of Probability was an influential textbook. Markov influenced later figures in the Russian tradition including Bernstein and Neyman. The latter indirectly paid tribute to Markov’s textbook when he coined the term Markoff theorem for the result Gauss had obtained in 1821; it is now known as the Gauss-Markov theorem. J. V. Uspensky’s Introduction to Mathematical Probability (1937) put Markov’s ideas to an American audience. See Life & Work. There is an interesting volume of letters, The Correspondence between A.A. Markov and A.A. Chuprov on the Theory of Probability and Mathematical Statistics ed. Kh.O. Ondar (1981, Springer). See also Sheynin ch. 14 and G. P. Basharin et al. The Life and Work of A. A. Markov. |

|

|

‘Student’ = William Sealy Gosset (1876-1937) Chemist, brewer and statistician. MacTutor References. Wikipedia

|

1920-1930 Many of the people who would dominate probability and statistics over the following decades first made an impact in this period. Of them, the individual who had the greatest hold over his subject was Fisher in statistics. The ascendancy of Fisher was also the ascendancy of the English language. While German was the international scientific language of the time (and the language of probability), Fisher and his followers rarely referred to literature in German, believing that that literature ended with Gauss, although Helmert was later added to the canon. Thus standard works, like those by Czuber, did not cross the Channel.

· In probability advances included refinements of the central limit theorem (here Lindeberg made an important contribution) and the strong law of large numbers (which went back to Borel in 1909 and Cantelli in 1917) and new results including the law of the iterated logarithm. There were contributions from most countries of Continental Europe, e.g. Mazurkiewicz from

· The foundations of probability received much attention and certain positions found classic expression: the logical interpretation of probability (degree of reasonable belief) was propounded by the Cambridge philosophers, W. E. Johnson, J. M. Keynes and C. D. Broad, and presented to a scientific audience by Jeffreys; Keynes was influenced by the German physiologist/philosopher J. von Kries. The frequentist view was developed by von Mises.

·

The Modern (Evolutionary)

Synthesis of Mendelian genetics

and Darwinian natural selection involved the solution of problems involving

stochastic processes, e.g. branching

processes. However, the work

did not have as much influence on the development of probability theory as

similar work in physics; see Fokker-Planck

equation. The

principal contributors to the modern synthesis,

Fisher, J. B. S. Haldane

and Sewall

Wright (path

analysis), all contributed to statistics, but Fisher was in a class

apart.

·

In statistics

R. A. Fisher generated many new ideas on estimation and hypothesis testing and

his work on the design of

experiments moved that topic from the fringes of statistics to the centre. His Statistical Methods for

Research Workers (1925)

was the most influential statistics book of the century.

·

W.

A. Shewhart ASQ Wikipedia

pioneered quality control, which

became a major industrial application of statistics.

See Hald (1998, ch. 27 and passim) and von Plato (ch. 4-6)

|

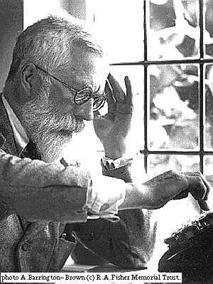

By permission of Fisher Memorial Trust |

|

|

|

Paul Lévy (1887-1971) Mathematician.

MacTutor

References.

MGP.

LP. In the late C19 French

mathematicians continued to work on probability but Bertrand

and Poincaré

made no advances comparable to those made by Laplace and his

contemporaries, nor did they conserve the rich tradition. Major

mathematicians, including Borel

(see normal number)

and Fréchet, wrote on probability in the early C20 but Lévy became

the leading French probabilist. Lévy was originally interested in analysis

(see functional analysis)

and he only started publishing on probability around 1920. In 1920 Lévy was

appointed Professor of Analysis at the École Polytechnique, a position he

held until 1959. He revived characteristic

function methods (the name is his) and used them in his work on the stable laws and the central limit theorem.

This work was summarised in his influential Calcul des Probabilités (1925).

In the 1930s he focussed on the study of stochastic processes, in particular martingales and Brownian motion. His books on these topics are Théorie de l'addition des variables

aléatoires (1937) and Processus

stochastiques et mouvement brownien (1948). See also Annales

(including nice photos) and Rama Cont. See also von Plato passim. Paul Lévy,

Maurice Fréchet : 50 ans de correspondance

mathématique (eds. Barbut,

Locker & Mazliak) includes

a review of Lévy’s work in probability.

Lévy’s student Michel Loève moved to |

|

|

Richard von Mises (1883-1953) Applied

mathematician. MacTutor.

References. MGP.

SC, LP. Mises was educated at the

Technische Hochschule in |

|

|

Harold Jeffreys (1891-1989) Applied mathematician and physicist. MacTutor

References.

SC, LP. Jeffreys has a good claim to be

considered the first Bayesian statistician in that he used only Bayesian methods. Jeffreys

arrived to study mathematics at |

|

|

Norbert Wiener (1894-1964) Mathematician.

MacTutor

References.

MGP.

SC, LP. Wiener’s working life was spent

at MIT. He was well-travelled, having studied at Harvard, |

|

Aleksandr

Yakovlevich Khinchin (1894-1959)

Mathematician. MacTutor

References

MGP Publications.

Khinchin was a student at Moscow State University

and spent almost all his working life there. Khinchin, like Lévy

and Doob, started in analysis. The university had a very

strong analysis group and Khinchin’s supervisor was Luzin.

There was no tradition of work in probability there until Khinchin and Kolmogorov created one. There do not seem to have been any

personal links with the Chebyshev/Markov

tradition at |

1930-1940 Against a calamitous economic and political background there were important developments in probability, statistical theory and applications. In the Soviet Union mathematicians fared better than economists or geneticists and in the early years they could travel abroad and publish in foreign journals; thus Kolmogorov and Khinchin published in the main German periodical, Mathematische Annalen GDZ. In Germany Jews were barred from academic jobs from 1934.

·

In probability

the main developments were Kolmogorov’s axiomatisation of probability and the

development of a general theory of stochastic

processes by him and Khinchin. This work is

usually seen as marking the beginning of modern probability. See von Plato (ch. 7) “Kolmogorov’s measure

theoretic probabilities.” Most of the activity was in the Soviet Union and

·

In the foundations of probability Bruno de

Finetti and Frank Ramsey’s (1903-1930) (St.

Andrews, N.-E.

Sahlin) work on subjective probability appeared. Ramsey started by

criticising the

·

In

·

In statistical

inference the main development was the Neyman-Pearson theory of hypothesis testing from

1933 onwards. Multivariate analysis

became an identifiable subject, formed out of such contributions as the Wishart distribution

(1928), Harold

Hotelling’s principal

components (1933) and canonical

correlation (1936) and Fisher’s discriminant analysis

(1936).

·

Applications of mathematics

and statistics to economics came together in the

econometric

movement. This could look back to the C17 political arithmetic and the C19 work

on index numbers

and on Pareto's law

but econometric modelling, which involved the application of regression methods to

economic data, was a C20 development. Among the leaders in the 1930s were Jan

Tinbergen and Ragnar

Frisch. Econometricians who have followed them as Nobel laureates

in economics include Engle, Granger, Haavelmo, Heckman, Klein, McFadden. Equally important were developments in the

collection of economic information. In the

|

|

Andrei

Nikolaevich Kolmogorov (1903-87) Mathematician. MacTutor

References.

MGP.

LP. Kolmogorov was one of the most

important of C20 mathematicians and although he wrote a lot on probability it

was only a small part of his total output. Like Khinchin,

he was a student of Luzin

at |

|

Jerzy Neyman (1894-1981) Statistician. MacTutor

References.

NAS

ASA

MGP.

SC, LP, ESM. Neyman was educated in the tradition of Russian

probability theory and had a strong interest in pure mathematics. His

probability teacher at |

|

|

Harald Cramér (1893-1985) Mathematician,

statistician & actuary. MacTutor

References.

MGP.

Photos SC, LP. Personal

recollections Statistical

Science 1986. Cramér

studied at the |

|

|

Bruno de

Finetti (1906-85) Mathematician, actuary & statistician. MacTutor

References

Wikipedia

LP. De Finetti studied mathematics in |

|

|

William

Feller (1906-70) Mathematician. MacTutor

References.

MGP.

About half of Feller’s papers were in probability, the rest were in

calculus, functional analysis and geometry. After a first degree at the |

|

|

J. L. Doob

(1910-2004) Probabilist. MacTutor

References.

MGP. SS

Interview. Because Wiener was not part

of the probability community, Doob was the first “modern probabilist” from

the |

1940-1950 Among the millions who died in the Second World War

were mathematicians and statisticians. Doeblin

is only the best known of those killed; one of Neyman’s books

is dedicated to 10 lost colleagues and friends. Yet

this war, unlike the First World War, promoted the study of statistics and

probability. At the end of the war there were many more people working in

statistics, there were new applications and the importance of the subject to

society was more widely recognised.

·

The Nazi persecutions and

the Second World War drove many statisticians and mathematicians to the

·

The war brought many people

into statistics and probability. Savage and Tukey

are examples from the US while in Britain the recruits included Barnard

(MGP),

Box (MacTutor)

(MGP), Cox

(MGP),

D.

G. Kendall (MGP),

Lindley

(MGP).

The recruits were often better trained in abstract mathematics than earlier

statisticians. This contributed to closing the gap between the English

statistical and the Continental probability traditions.

·

The war generated research

problems out of which came Wiener’s

work on prediction and Wald’s on sequential analysis and

the new subject of operations

research. Governments’ need for information led to great expansion

in the production of official statistics. In

·

Between 1943 and 1946 three

advanced treatises on statistics appeared, by Cramér, M.

G. Kendall and Wilks

(MGP).

These works did much to consolidate the subject and thereby professionalise it.

·

Nonparametric methods

began to be systematically studied, using tools from the theory of statistical

inference; E. J. G.

Pitman was an important pioneer. The tests often came originally

from non-statisticians, like Spearman (rank correlation) or Wilcoxon

(Wilcoxon tests).

The existing repertoire of sign

test, permutation

test and Kolmogorov-Smirnov

test was soon expanded.

·

Modern time series analysis

came from the union of the theory of stochastic processes (see Khinchin

and Cramér), the

theory of prediction (Wiener and Kolmogorov)

and the theory of statistical inference (Fisher and Neyman) with harmonic analysis and correlation among the

grandparents (see above). One of the

main pioneers of the 40s was M. S. Bartlett.

In the 50s Tukey was a leading contributor, in the 60s Kalman (Kalman filter) and

systems engineers made important contributions and in the 70s the methods of G. E. P. Box Interview

and G. M. Jenkins

(Box-Jenkins) were

adopted in economics and business.

|

|

Abraham Wald (1902-1950)

Statistician. MacTutor

References.

MGP.

LP. Wald studied at the |

|

|

C. R. Rao (b. 1920) Statistician. MacTutor References

ASA MGP.

Recent

photo. Rao is the most distinguished member of the Indian statistical

school founded by P.

C. Mahalanobis and centred on the Indian Statistical Institute

and the journal Sankhya.

Rao’s first statistics teacher at the |

1950-1980 Expansion continued—more

fields, more people, more departments, more books, more journals! Computers began to have an impact—see below for more

details.

·

Existing departments were expanded:

e.g. in 1949 a second chair of statistics was created at LSE and filled by M.

G. Kendall. Bowley (above) only ever had a staff

of 2, E. C. Rhodes

and R. G. D. Allen. New institutions were created, e.g. the

Statistical Laboratory at Cambridge in 1947 and the Statistics Department

at Harvard in 1958.

·

The scope of probability theory increased with the emergence of new

sub-fields such as queueing

theory and renewal

theory. Feller’s Introduction to

Probability Theory made a very strong impact in the English-speaking

world; it promoted the study of the subject and made advanced topics, like Markov chains,

accessible.

·

In 1950 the

logician/philosopher Rudolf

Carnap published a major work, Logical Foundations of

Probability which advanced a dual interpretation of probability, as degree

of confirmation, which looked back to Cambridge (see Jeffreys),

and as relative frequency, which looked back to von Mises.

Probability was an important topic for other philosophers of science, including

Hans Reichenbach

and Karl

Popper. More recently philosophers have been attracted to the

monism of de Finetti’s subjectivism. Alan Hajek’s Interpretations

of Probability in the Stanford Encyclopedia of

Philosophy reviews the modern scene.

·

In statistics

there was a Bayesian revival. In

·

W.

Edwards Deming (ASQ)

Wikipedia

(LP) continued Shewhart’s work on quality control and was

very effective in getting industry to adopt these methods.

·

There was a great expansion

in medical statistics and epidemiology. Austin Bradford

Hill was an important contributor to both fields: he pioneered randomised clinical trials and, in work

with Richard Doll,

demonstrated the connection between cigarette smoking and lung cancer.

·

Laplace

and Quetelet saw the work of the census as a possible

application of probability but the use of statistical theory by official data

gatherers only became institutionalised through the activities of Morris Hansen

(interview)

at the US Census Bureau.

·

Since

the 50s finance

has been an important area for applied decision theory: the 1990 Nobel Prize in

economics was awarded to Markowitz

for work that was influenced by Savage although the idea of

expected utility goes back to Daniel Bernoulli. Since the 70s

finance has been an important area for applied stochastic processes. Ito had developed his stochastic calculus in

the 40s but it was applied in an unexpected way in the Black-Scholes model for

pricing derivatives. Scholes

and Merton

received the 1997 Nobel Prize in economics for their contribution (see Black-Scholes formula).

The intellectual ancestor of stochastic finance was Bachelier (above).

·

In 1973 the Annals of

Mathematical Statistics (see above) split into the

Annals of Probability and the Annals of Statistics. This represented

increasing specialisation (there weren’t many new Cramérs) as well as the need to expand journal pages.

|

|

Leonard

Jimmie Savage (1917-71) Statistician. MacTutor

References.

MGP

LP. After training

as a pure mathematician and obtaining a PhD from the |

|

|

John W. Tukey (1915-2000) Statistician. MacTutor

References.

ASA

and Bell Labs.

MGP. Tukey

originally trained as a topologist (see finite character and Zorn's lemma) but

became a statistician in the Second World War. He remained in |

1980+ Instead of describing people from the very recent past, I describe the effect the computer has had on statistics since its advent around 1950, and the changes in the writing of the history of probability and statistics in recent decades.

The effects of the computer. The changes following the introduction of the computer have been much more radical than those following the increased use of mechanical calculating machines at the end of the C19. Such machines provided the material basis for Pearson and Fisher’s research and for the construction of their statistical tables in the period 1900-50. The machines were not in general use and Fisher assumed that most of the users of the tables and the “research workers” who read his book would use logarithm tables or a slide rule. For general background see A Brief History of Computing.

With the availability of

computers old activities took less time and new activities became possible.

·

Statistical tables and

tables of random numbers first became much easier to produce and then they

disappeared as their function was subsumed into statistical packages.

·

Much bigger data sets could

be assembled and analysed.

·

Exhaustive data-mining became

possible.

·

Much more complex models

and methods could be used. Methods have been designed with computer

implementation in mind—a good example is the family of Generalized

linear models linked to the program GLIM; see John Nelder FRS.

·

In the early C20, when Student (1908) wrote about the normal mean and Yule

(1926) about nonsense correlations, they used sampling experiments, and in the

1920s it became worthwhile to produce tables of random numbers. With the

introduction of computer-based methods for generating pseudo random numbers

much more ambitious Monte-Carlo

investigations (introduced by von

Neumann and Ulam)

became possible. The Monte-Carlo experiment became a standard way of

investigating the finite sample behaviour of statistical procedures.

·

Since around 1980

Writing history. In recent

decades there has been a flood of works—books and articles—on the history of

probability and statistics from statisticians, philosophers and historians. I

will mention a few titles in each category to indicate the range of activity.

·

50 years ago the standard

general works were Todhunter for the history of probability,

Walker

for statistics with an emphasis on psychology and education and Westergaard

for statistics with an emphasis on economic and vital statistics.

Helen M.

Walker (1929) Studies in the History of Statistical Method, Baltimore: Williams &

Wilkins.

Harald

Westergaard (1932) Contributions to the History of Statistics,

·

E

S Pearson got history moving in

F. N. David (1962) Games, Gods and Gambling:

the Origins and History of Probability and Statistical Ideas from the Earliest Times

to the Newtonian Era.

E. S. Pearson

(ed) (1978) The History of Statistics in the 17th and 18th Centuries against

the Changing Background of Intellectual, Scientific and Religious Thought:

Lectures by Karl Pearson given at University College, 1921-1933.

·

Oscar Sheynin, Anders

Hald and Stephen Stigler

(see above) have been the leading contributors to the

technical literature. Sheynin has published many articles, mainly in the

Archive

for History of Exact Sciences. There is a list of Hald’s

history writings here.

Some of Stigler’s articles are reprinted in

Stephen M

Stigler (1999) Statistics on the Table: The History of Statistical Concepts

and Methods,

·

Much

neglected work has been rediscovered. Bienaymé’s

(

C. C. Heyde

& E. Seneta (1977) I. J. Bienaymé: Statistical Theory

Anticipated,

S. L.

Lauritzen (2002) Thiele: Pioneer in Statistics,

A. Hald (1998)

A History of Mathematical Statistics from

1750 to 1930,

·

Among

the philosophers

are Hacking and von Plato; as well as the books referred to above,

see

I. Hacking

(1990) The Taming of Chance

·

In recent decades the history and sociology of science have flourished. T. S.

Kuhn’s Structure of Scientific Revolutions (1962) had a

strong influence on both fields. Among the works on probability and statistics

by historians and sociologists are

T.

M. Porter (1986) The Rise of Statistical Thinking 1820-1900, Princeton:

L. Daston

(1988) Classical Probability in the Enlightenment. Princeton:

The Probabilistic

Revolution, volume 1 edited by L. Krüger, L. J. Daston and M. Heidelberger,

volume 2 edited by L. Krüger, G. Gigerenzer and M. S. Morgan Cambridge, Mass.:

MIT Press (1987) contents

D. A. MacKenzie (1981) Statistics

in

There is a review essay of Daston

and Krüger et al. by MacKenzie in

·

Much

effort has gone into making important texts available

S. M. Stigler and I. M. Cohen American Contributions to Mathematical Statistics in the Nineteenth Century, contents (includes work by Adrain, De Forest, Newcomb, B. Peirce, C.S. Peirce (Stanford) and others)

H. A. David & A. W. F.

Edwards (eds.) (2001) Annotated

·

Post-1940

developments have not attracted the attention of historians yet. Some classic modern

contributions are reprinted (with commentary) in S. Kotz & N. L. Johnson

(Editors) (1993/7) Breakthroughs in

Statistics: Volumes I-III, New York: Springer.

Statistical Science has been publishing interviews for the past 20 years and

these are a form of living history—there must be 100 by now; the post-1995

issues are available through Euclid.

Econometric Theory

also publishes interviews and articles on history; among the statisticians

interviewed are T. W. Anderson

and J.

Durbin.

·

Probability

and statistics now appear as topics in textbooks on the history of mathematics

and the history of disciplines that use probability and statistics. See for

example

V.J. Katz

(1993) A History of Mathematics,

M. Cowles

(2001) Statistics in Psychology: An Historical Perspective,

·

Articles

on the history of probability and statistics appear in several journals

including Archive

for History of Exact Sciences, Biometrika, British Journal for the History of

Science, Historia

Mathematica, International Statistical Review, Isis, Journal

of the History of the Behavioral Sciences and Statistical Science.

·

In

2005 a specialist online journal was

launched, Electronic

Journal for History of Probability and Statistics/Journal Electronique

d'Histoire des Probabilités et de la Statistique.

Online bibliographies

The JEHPS lists Publications in the History of Probability and Statistics. The current lists cover 2005-9.

Stigler’s History of Statistics has suggestions for further reading and an extensive bibliography. Hald’s History of Mathematical Statistics from 1750 to 1930 also has a very valuable bibliography.

There are several online bibliographies. Two of the bibliographies were compiled in the mid-90s but are still useful: a brief one by Joyce, restricted to secondary sources, and an extensive one by Lee.

Oscar Sheynin References

Giovanni Favero Storia della Probabilità e della Statistica

David

Joyce History

of Probability and Statistics

Peter M Lee The History of Statistics: A Select Bibliography

Online texts

Through the web many of

the important original texts are now easily accessible. The following open

access sites are very useful.

DML:

Digital Mathematics Library retrodigitized Mathematics Journals and Monographs

Life

and work of Statisticians

SAO/NASA ADS Astronomy

journals

Gallica particularly good for French literature